Aider and the Future of Coding: Open-Source, Affordable, and Local LLMs

The landscape of AI coding is evolving rapidly, with tools like Cursor gaining popularity for multi-file editing and GitHub Copilot for AI-assisted autocomplete. However, both of these solutions are closed-source and require a subscription.

This post explores Aider, an open-source AI coding tool that offers flexibility, cost-effectiveness, and impressive performance, especially when paired with affordable, free, or local LLM options such as DeepSeek, Google Gemini, and models run through Ollama.

Aider: Open Source, Customizable, and Efficient

Unlike closed-source alternatives, Aider is free, open-source, and highly customizable. Developed by Paul Gauthier, Aider runs directly in the terminal and provides granular control over the AI coding process. What is particularly nice about Aider is that it strips away much of the baggage that other programs carry. It does not feel like a replacement for VS Code but more like a companion application for interfacing with LLMs.

One of Aider's key strengths lies in its efficient use of LLMs. Aider lets developers closely monitor token usage and costs, so they can see what each request is spending. This matters because, even as the per-token cost of LLMs continues to fall, context windows are expanding to accommodate larger codebases, and larger prompts mean more tokens to pay for.

The Rise of Affordable and Accessible LLMs

The limitation (or benefit, depending on your perspective) is that Aider requires access to an LLM in some shape or form. The recommended approach is to use an OpenAI or Anthropic API key with a model such as GPT-4o or Claude Sonnet. At the moment, these top-tier models can incur significant costs when working with large repos. Below are some very cheap or even free alternatives that can be used flexibly with Aider.

DeepSeek-Coder-V2: This open-source coding LLM consistently ranks high in benchmarks, often surpassing even GPT-4 in specific coding tasks. DeepSeek-Coder-V2 supports 338 programming languages and a 128K context window, making it a formidable contender in the AI coding space. The major boon here is that the DeepSeek API, while not free, is certainly close to it: $0.14 per million input tokens and $0.28 per million output tokens at the time of writing. This is cheaper than GPT-4o or Claude Haiku while offering Sonnet-like performance. Although I personally pay for a full-fat subscription, having really cheap API access is very handy for many tasks, and I struggled to deplete the £10 of credit I added for these tests.
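To put those prices in perspective, here is a minimal back-of-the-envelope sketch in Python. It uses only the per-million-token prices quoted above. The function name and the example session (100 requests of 20,000 input tokens and 1,000 output tokens each) are hypothetical and chosen purely for illustration.

```python
# Prices quoted in the paragraph above (USD per million tokens).
INPUT_PRICE_PER_MILLION = 0.14
OUTPUT_PRICE_PER_MILLION = 0.28


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for a single request."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical session: 100 requests, each sending 20,000 tokens of
    # context and receiving 1,000 tokens back.
    per_request = estimate_cost_usd(20_000, 1_000)
    print(f"Per request: ${per_request:.5f}")
    print(f"100 requests: ${per_request * 100:.2f}")
```

Under those assumed numbers, a session of 100 fairly large requests comes to roughly $0.31, which matches my experience of struggling to spend the credit.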

Google Gemini: Google's Gemini gives developers a free and accessible way to integrate powerful language capabilities into their applications. Obtaining a Gemini API key is straightforward, and Google offers a free tier. In my testing, the free tier of Gemini Pro was a pain (it runs out of tokens fast), but the smaller Gemini Flash was effectively unlimited (assuming you don't fill up the 1 million token context window every minute). Having this huge context window for free is super useful for small, repetitive tasks that require lots of context, such as writing code from documentation.

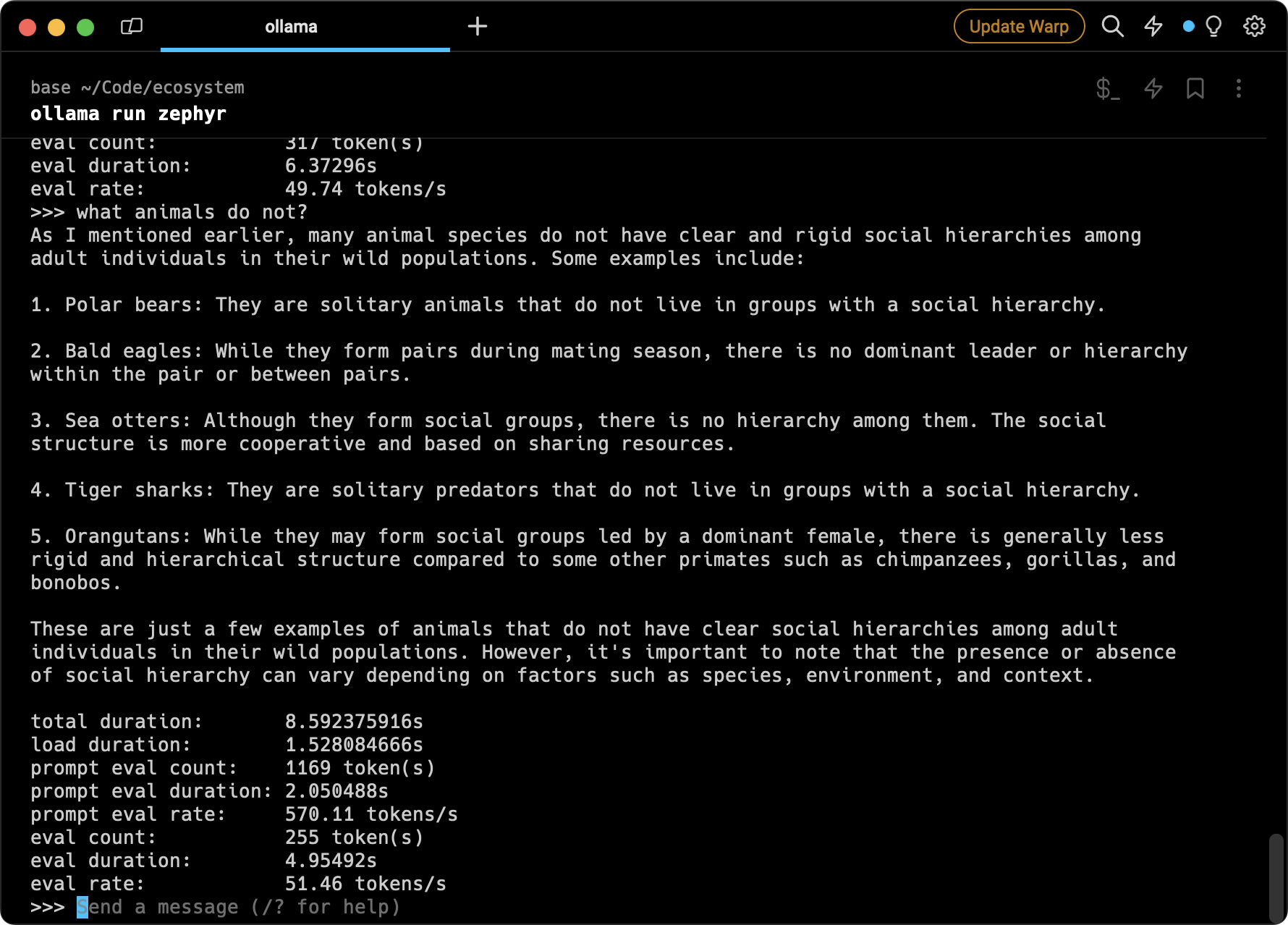
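If you want a quick sanity check on whether a pile of documentation will fit in that 1 million token window, a rough sketch like the one below can help. Note the assumptions: the 4-characters-per-token ratio is only a common rule of thumb (real tokenizers vary by model and content), and the function names and the 8,000-token reserve for the reply are hypothetical.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000  # context window size quoted above
CHARS_PER_TOKEN = 4  # rough rule of thumb (assumption); real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of a string from its length."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(texts: list[str], reserve_tokens: int = 8_000) -> bool:
    """Check whether all texts fit while leaving room for the reply."""
    total = sum(estimate_tokens(text) for text in texts)
    return total + reserve_tokens <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    # Hypothetical documentation pages, represented here as plain strings.
    docs = ["x" * 200_000, "y" * 350_000]
    print("Estimated tokens:", sum(estimate_tokens(d) for d in docs))
    print("Fits in context:", fits_in_context(docs))
```

Treat the result as a ballpark figure only; the provider's own token counts are the ones that matter for limits.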

Ollama: For developers seeking a local, offline LLM solution, Ollama is a compelling option. Ollama lets you run a variety of pre-trained LLMs locally, ensuring privacy and reliable performance even without internet connectivity. I tried this with a variety of quantised and small models, many of which ran great on my 12GB GPU. In my experience, quantised models are less instructable but still offer strong summarisation performance, while full-precision distilled models such as the 2B parameter Gemma and Llama models are extremely compelling. If you're willing to take a hit on speed, it is also possible to run 70B parameter models using system RAM on the CPU, as I did, but I would only do this if no internet connection is available.
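A rough way to guess whether a model will fit on a GPU is to multiply the parameter count by the bytes used per weight. The sketch below does that arithmetic. It is an estimate under stated assumptions, not a measurement: it counts weights only, the 2 GB overhead allowance for context and runtime buffers is a guess, the function names are hypothetical, and the 7B 4-bit entry is just an illustrative example.

```python
def weights_size_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits_on_gpu(params_billions: float, bits_per_weight: int,
                gpu_gb: float = 12.0, overhead_gb: float = 2.0) -> bool:
    """True if the weights plus a guessed overhead fit in GPU memory."""
    needed = weights_size_gb(params_billions, bits_per_weight) + overhead_gb
    return needed <= gpu_gb


if __name__ == "__main__":
    examples = [
        ("2B model, 16-bit", 2, 16),
        ("7B model, 4-bit", 7, 4),
        ("70B model, 4-bit", 70, 4),
    ]
    for name, params, bits in examples:
        size = weights_size_gb(params, bits)
        verdict = "fits" if fits_on_gpu(params, bits) else "does not fit"
        print(f"{name}: ~{size:.1f} GB of weights, {verdict} in 12 GB")
```

By this estimate, a 70B model needs roughly 35 GB for its weights even at 4 bits per weight, which is consistent with having to fall back to system RAM on a 12GB card.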

Conclusion

Aider, combined with the rise of affordable and accessible LLM options like DeepSeek, Google Gemini, and local models run through Ollama, represents a paradigm shift in the AI coding landscape. This powerful combination gives developers a solution that, notably, doesn't slow down existing workflows and processes. The ability to swap models on the fly lets users trade off speed, accuracy, and price to suit whatever task is at hand.