Fragment-based drug discovery is a powerful technique for finding lead compounds for medicinal chemistry. Crystallographic fragment screening is particularly useful because it tells us not only whether a fragment binds, but also how it binds. This information allows for the rational elaboration and merging of fragments.

However, this comes with a unique challenge: confidence in the experimental readout (whether and how a fragment binds) is tied to the quality of the crystallographic model that can be built. This intimately links crystallographic fragment screening to the general statistical idea of a “model”, and to the statistical ideas of goodness of fit and overfitting.

The field of statistics has developed very sophisticated techniques for approaching these questions. Some of them have been used in crystallography historically, some are currently being explored, and others have yet to find a home in this field.

Crystallographic model building

The output of a macromolecular crystallography experiment is a set of diffraction patterns. These record the amplitudes of the Fourier transform of the electron density in the crystal's repeating unit cell (strictly, the measured intensities are proportional to the squared amplitudes), but they lack the other half of each complex Fourier coefficient: the phase.
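To see why the missing phases matter, here is a minimal 1D sketch (a toy, not a real crystallographic calculation). The "detector" records only |F(h)|², and a translated or inverted copy of the same density gives exactly the same intensities, so the intensities alone cannot tell us where the density is. The helper names `dft` and `intensities` are illustrative.

```python
import cmath
import math

def dft(values):
    """Naive discrete Fourier transform: F(h) = sum_x rho(x) * exp(-2*pi*i*h*x/N)."""
    n = len(values)
    return [
        sum(v * cmath.exp(-2j * math.pi * h * x / n) for x, v in enumerate(values))
        for h in range(n)
    ]

def intensities(density):
    """What the detector records in this toy: |F(h)|^2, with the phases discarded."""
    return [abs(f) ** 2 for f in dft(density)]

# A toy 1D "unit cell" containing two atoms of different heights.
density = [0.0] * 16
density[3], density[7] = 1.0, 0.6

shifted = density[5:] + density[:5]               # same atoms, different position
inverted = [density[-x % 16] for x in range(16)]  # same atoms, mirrored arrangement

same = all(
    abs(a - b) < 1e-9 and abs(a - c) < 1e-9
    for a, b, c in zip(intensities(density), intensities(shifted), intensities(inverted))
)
print("different densities, identical intensities:", same)
```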

Determining phases for a new structure is in general a challenging problem, for which the field has developed many techniques. In crystallographic fragment screens, however, a good model of the protein is typically already available, and it can be used to estimate accurate initial phases for each new dataset.
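A toy sketch of how a known model supplies phases, assuming a 1D periodic density and computing the "observed" amplitudes from a known crystal density (in a real experiment they come from the measured intensities). The map combines observed amplitudes with phases calculated from the model; features missing from the model, here a hypothetical ligand at grid point 26, are expected to appear only in weakened form, because the phases carry the model's bias. All names are hypothetical.

```python
import cmath
import math

def dft(values):
    n = len(values)
    return [sum(v * cmath.exp(-2j * math.pi * h * x / n) for x, v in enumerate(values))
            for h in range(n)]

def idft(coeffs):
    n = len(coeffs)
    return [sum(c * cmath.exp(2j * math.pi * h * x / n) for h, c in enumerate(coeffs)).real / n
            for x in range(n)]

def gaussian_peaks(n, peaks, width=1.0):
    """Periodic 1D density built from (position, height) Gaussian peaks."""
    density = []
    for x in range(n):
        total = 0.0
        for pos, height in peaks:
            d = min(abs(x - pos), n - abs(x - pos))  # distance on a periodic grid
            total += height * math.exp(-d * d / (2 * width ** 2))
        density.append(total)
    return density

def model_phased_map(f_obs_amplitudes, model_density):
    """Combine measured amplitudes with phases calculated from a model, then invert."""
    f_calc = dft(model_density)
    coeffs = [amp * cmath.exp(1j * cmath.phase(fc)) for amp, fc in zip(f_obs_amplitudes, f_calc)]
    return idft(coeffs)

protein = [(5, 1.0), (11, 0.8), (17, 0.9)]
model = gaussian_peaks(32, protein)                  # the known protein model
crystal = gaussian_peaks(32, protein + [(26, 0.4)])  # the crystal also contains a ligand
f_obs = [abs(f) for f in dft(crystal)]               # only amplitudes are "measured"

density_map = model_phased_map(f_obs, model)
print(f"ligand site: model={model[26]:.3f}  model-phased map={density_map[26]:.3f}")
```

If the model were perfect, the model-phased map would reproduce the crystal density exactly; the further the model is from the truth, the more the map is pulled towards the model.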

Once reasonable phases are available, model building typically requires manual intervention: a crystallographer uses a tool such as Coot to fit the protein backbone and side chains into an electron density map calculated from the phased diffraction data. In crystallographic fragment screens, the crystals are typically reproducible enough that only very minor modifications to the model are required, and automated methods are able to make them.

In manual modelling, however, this is typically where the question of how to parameterise the model becomes apparent. With reasonably accurately phased maps, it becomes possible to discern features such as:

- Partial occupancy of atoms

- Anisotropic temperature factors (B-factors)

- Ordered waters

All of these require increasing the number of parameters in the model. For high-resolution, low-noise datasets the “sensible” choice of parameterisation is usually obvious to humans, but for noisier datasets overfitting becomes a significant danger. Does a patch of electron density represent experimental noise, or a low-occupancy alternative conformation? The answer can be unclear by eye, and humans are famously biased builders of crystallographic models.
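A rough tally of how these choices inflate the parameter count. This assumes the usual per-atom parameters (three coordinates, one isotropic B or six anisotropic U-tensor components, optionally an occupancy) and ignores restraints and shared occupancy groups; the 2000-atom model and the helper name are hypothetical.

```python
def params_per_atom(anisotropic: bool = False, own_occupancy: bool = False) -> int:
    """Refinable parameters for one atom under a given parameterisation."""
    n = 3                           # x, y, z coordinates
    n += 6 if anisotropic else 1    # six unique U-tensor components, or one isotropic B
    n += 1 if own_occupancy else 0  # in practice occupancies are usually shared per group
    return n

n_protein_atoms = 2000  # hypothetical model size
print("isotropic:", n_protein_atoms * params_per_atom())                    # 8000
print("anisotropic:", n_protein_atoms * params_per_atom(anisotropic=True))  # 18000
print("+100 waters:", n_protein_atoms * params_per_atom() + 100 * params_per_atom())  # 8400
```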

This is where crystallographers typically turn to objective metrics of goodness of fit. The obvious “gold standard” is the extent to which a model explains the experimentally observed diffraction pattern, and this is judged using RWork: the relative discrepancy between the structure-factor amplitudes calculated from the model and those that were observed.

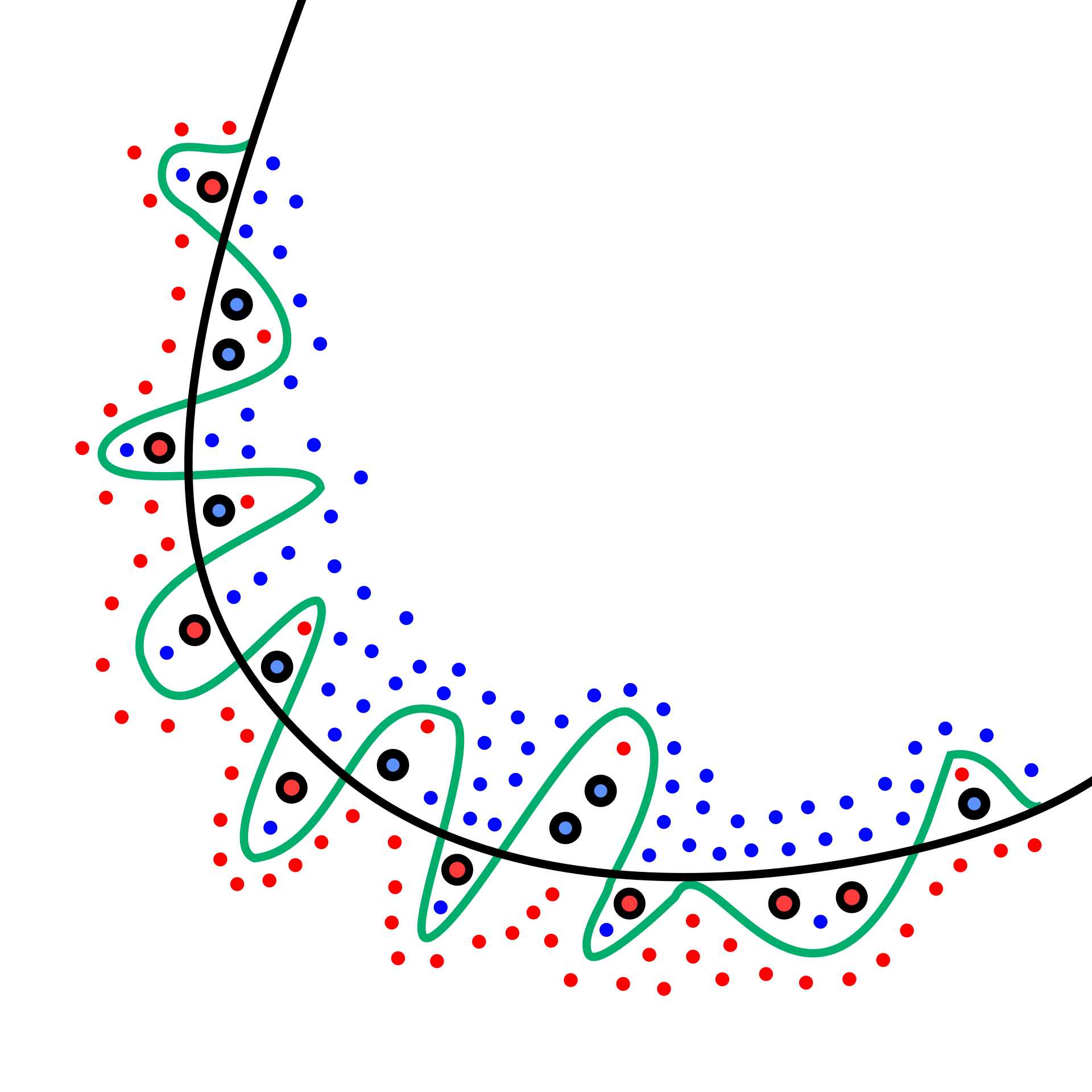
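The conventional R-factor can be written as R = Σ| |F_obs| - k|F_calc| | / Σ|F_obs|. A minimal sketch, assuming a single overall scale factor k fitted by least squares (refinement programs use more sophisticated scaling); the same function gives RWork or RFree depending on which reflections it is given.

```python
def r_factor(f_obs, f_calc):
    """R = sum(| |Fobs| - k|Fcalc| |) / sum(|Fobs|), with a single least-squares scale factor k."""
    obs = [abs(f) for f in f_obs]
    calc = [abs(f) for f in f_calc]
    # k minimises sum((obs - k*calc)^2); real programs also model anisotropic
    # scaling and bulk solvent, which this sketch omits.
    k = sum(o * c for o, c in zip(obs, calc)) / sum(c * c for c in calc)
    return sum(abs(o - k * c) for o, c in zip(obs, calc)) / sum(obs)

print(r_factor([10.0, 20.0, 30.0], [5.0, 10.0, 15.0]))   # 0.0: a perfect fit up to scale
print(r_factor([10.0, 20.0, 30.0], [12.0, 18.0, 30.0]))
```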

Unfortunately, while this measures the goodness of fit of the model, it has one very significant problem: the model has been optimised against this very data during refinement. With enough parameters available, very good fits become possible, but at the cost of fitting noise. Our model is then no longer “predictive” of what we might observe in an identical repeat experiment, and we can no longer make confident statements about the underlying truth.
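A toy illustration of the problem, using made-up numbers rather than crystallographic data: model A has a single parameter (the mean), while model B has one parameter per observation and simply reproduces the data. B fits the data it was optimised against perfectly, but on a repeat of the same experiment its error is expected to be about twice the noise variance, worse than A's.

```python
import random

random.seed(0)
TRUE_SIGNAL, NOISE_SD, N = 1.0, 1.0, 200  # toy values, not crystallographic

def measure():
    """One noisy 'experiment': N observations of the same underlying signal."""
    return [TRUE_SIGNAL + random.gauss(0.0, NOISE_SD) for _ in range(N)]

def mse(pred, obs):
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)

observed = measure()

model_a = [sum(observed) / N] * N  # one parameter: the mean
model_b = list(observed)           # one parameter per observation: memorises the noise

repeat = measure()  # an identical experiment that neither model was fitted to
print(f"fit to data:  A={mse(model_a, observed):.2f}  B={mse(model_b, observed):.2f}")
print(f"repeat:       A={mse(model_a, repeat):.2f}  B={mse(model_b, repeat):.2f}")
```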

In crystallography, and in crystallographic fragment screening in particular, this presents one very obvious problem: we collect so many datasets that, if we are willing to let the occupancy be arbitrarily low, we will be able to fit fragments to the noise “well” in some of them. Our goodness of fit is then no longer predictive of whether the fragment has actually bound, which makes the readout of the experiment useless for answering the question we were actually asking. Bias is a particularly pressing problem in crystallography because the phases used to calculate the maps come from the model itself: the maps are biased towards whatever has been built, so overfitting is very easy.
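The scale of the problem can be sketched with a back-of-the-envelope calculation, assuming (hypothetically) that each noise-only dataset independently has a small chance p of yielding a convincing-looking fragment fit. The chance that at least one of n datasets does so is 1 - (1 - p)^n; for p = 0.01 and 1,000 datasets this already exceeds 0.9999.

```python
def prob_at_least_one_false_hit(p_single: float, n_datasets: int) -> float:
    """Chance that at least one of n noise-only datasets passes a test with false-positive rate p."""
    # Assumes the datasets are independent, which is a simplification.
    return 1.0 - (1.0 - p_single) ** n_datasets

for n in (1, 10, 100, 1000):
    print(n, round(prob_at_least_one_false_hit(0.01, n), 5))
```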

This is where cross-validation comes in. By fitting the model to only a fraction of the data and judging it on data held out from the fitting process, we can estimate its ability to predict new data. In crystallography this estimate is given by RFree (or, more rarely, RComplete), which is computed on a small, randomly chosen set of reflections (typically around 5%) that is excluded from refinement.
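A toy sketch of the RFree idea, with made-up 1D "reflections" and a piecewise-constant model whose number of bins plays the role of the number of parameters. Five percent of the data are flagged as a free set and never used for fitting. As bins are added, the work error can only decrease, whereas the free error is expected to bottom out and then rise once the model starts fitting noise. The data, model and names are illustrative only.

```python
import random

random.seed(1)
N = 1000
xs = [random.random() for _ in range(N)]             # toy "resolution" coordinate
ys = [2.0 * x + random.gauss(0.0, 0.3) for x in xs]  # noisy observations of a smooth trend

free = set(random.sample(range(N), N // 20))         # 5% free set, never fitted
work = [i for i in range(N) if i not in free]

def bin_of(x, k):
    return min(int(x * k), k - 1)

def fit_bins(k, idx):
    """Least-squares fit of a piecewise-constant model with k bins (k parameters)."""
    sums, counts = [0.0] * k, [0] * k
    for i in idx:
        sums[bin_of(xs[i], k)] += ys[i]
        counts[bin_of(xs[i], k)] += 1
    overall = sum(ys[i] for i in idx) / len(idx)  # fallback for bins with no work data
    return [s / c if c else overall for s, c in zip(sums, counts)]

def mse(model, idx):
    k = len(model)
    return sum((ys[i] - model[bin_of(xs[i], k)]) ** 2 for i in idx) / len(idx)

results = {}
for k in (1, 4, 16, 64, 256, 1024):
    model = fit_bins(k, work)
    results[k] = (mse(model, work), mse(model, free))
    print(f"bins={k:5d}  work error={results[k][0]:.3f}  free error={results[k][1]:.3f}")
```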

Bringing statistical ideas and crystallographic ones together

And this would be where our story ends… except that RFree does not reliably discriminate between the “correct” and overparameterised models, in either synthetic or real data. It performs particularly poorly on noisier datasets; or rather, worse than one would expect, even allowing for the fact that with sufficiently high noise the information needed to discriminate is simply not present [1].

In statistics, this corresponds to the idea of predictive accuracy: if we assume some underlying distribution generating our observations, how accurately does our model reflect that distribution? Would it accurately predict the probability of a given result, were we able to sample repeatedly? If our model is overfitted, it may fit our initial observation perfectly, but we should expect it to make worse predictions in general for repeated samples from the distribution.

So we might ask what other techniques statistics has developed for model comparison, and whether they might be applicable here. Fortunately, likelihood-based refinement gives us a powerful link between statistical model comparison and refined structures: because modern refinement programs optimise a likelihood target, each refined model comes with a likelihood that statistical criteria can work with.

The information-theoretic model comparison criteria AIC (the Akaike information criterion) and BIC (the Bayesian information criterion) give principled estimates of which of several statistical models will best predict data that could have been observed. By penalising the log-likelihood according to the number of parameters in each model, they let us decide which model better represents the underlying distribution that generated the observed data.
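For reference, the standard definitions are AIC = 2k - 2 ln L̂ and BIC = k ln n - 2 ln L̂, where L̂ is the maximised likelihood, k the number of parameters and n the number of observations; lower values are preferred. A minimal sketch with hypothetical log-likelihoods (not taken from [1]), in which an extra alternate conformation improves ln L̂ by 5 at the cost of 10 extra parameters:

```python
import math

def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike information criterion: 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_likelihood

def bic(log_likelihood: float, n_params: int, n_obs: int) -> float:
    """Bayesian information criterion: k ln(n) - 2 ln L (lower is better)."""
    return n_params * math.log(n_obs) - 2 * log_likelihood

n_obs = 500  # hypothetical number of observations
simpler = {"logL": -1000.0, "k": 10}
richer = {"logL": -995.0, "k": 20}  # e.g. with a hypothetical alternate conformation

for name, m in (("simpler", simpler), ("richer", richer)):
    print(name, "AIC", aic(m["logL"], m["k"]), "BIC", round(bic(m["logL"], m["k"], n_obs), 1))
```

Both criteria prefer the simpler model here (AIC 2020 versus 2030): under AIC each extra parameter has to buy more than one unit of log-likelihood, and BIC's per-parameter penalty, ln n, is stricter still for n of 8 or more.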

These methods have been applied in a crystallographic context, on both real and synthetic data, and select models more accurately than RFree [1]. It is worth noting, though, that this implementation does not accurately capture the degrees of freedom actually available to the model: it is difficult to estimate how much the restraints used in refining crystallographic models reduce the effective number of parameters, so doing even better should be possible.
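One way statisticians quantify how restraints shrink the effective number of parameters is the effective degrees of freedom of a ridge (Tikhonov) penalised linear model, df(λ) = Σ_j d_j² / (d_j² + λ), where d_j are the singular values of the design matrix and λ is the penalty weight. Geometric restraints are also quadratic penalties, so this is offered only as an analogy, not as the method used in [1] or by any refinement program; the singular values below are hypothetical.

```python
def effective_dof(singular_values, restraint_weight):
    """Effective degrees of freedom of a ridge/Tikhonov-penalised linear model.

    df = sum_j d_j^2 / (d_j^2 + lambda). The formula is the same whether the
    penalty pulls parameters towards zero or towards fixed target values.
    Assumes non-zero singular values when restraint_weight is 0.
    """
    return sum(d * d / (d * d + restraint_weight) for d in singular_values)

# Hypothetical: a few well-determined directions and many poorly determined ones.
svals = [10.0, 8.0, 5.0] + [0.5] * 7

for lam in (0.0, 0.1, 1.0, 10.0):
    print(f"lambda={lam:5.1f}  nominal={len(svals)}  effective={effective_dof(svals, lam):.2f}")
```

The poorly determined directions are the first to be "absorbed" by the penalty, which is the intuition behind restraints reducing the parameter count that an information criterion should charge for.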

The crystallographic fragment based drug discovery perspective

So, if information-theoretic criteria are a promising avenue for deciding between models, then all that remains is to apply them to decide whether or not a fragment is present, right?

Well… no. Or at least, probably not. We still face a major issue.

In modern refinement engines it is very easy to “exchange” lower occupancies for higher B-factors (and vice versa). For fragments this is particularly problematic because, unlike the protein backbone, we expect them often to bind at very low occupancy. In practice, attempts to fit these models almost always rely on “fixing” the B-factor in order to get a stable refinement. Is this fixing justified? Very unlikely, if the extent to which fragments move in molecular dynamics simulations is to be believed: the B-factors are probably fixed at implausibly low values in order to smooth the likelihood surface enough to get stable refinements. And this doesn't even begin to address how one would build the model in practice in order to refine it; at low occupancies, even getting a starting point is likely impossible.
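A crude way to see the occupancy/B-factor exchange, assuming each atom is a point scatterer blurred only by an isotropic B-factor (ignoring the atomic form factor and the resolution cutoff). Its density is then q(4π/B)^{3/2} exp(-4π²r²/B), so the peak height scales as q·B^{-3/2}: a lower occupancy with a lower B-factor can produce the same peak as a higher occupancy with a higher B-factor, and the profiles differ mainly in their width and tails, which weak, noisy data constrain poorly. The function names are hypothetical.

```python
import math

def atom_density(occupancy: float, b_factor: float, r: float = 0.0) -> float:
    """Density at distance r (Å) of a point atom blurred by an isotropic B (Å^2); toy model."""
    return (occupancy * (4 * math.pi / b_factor) ** 1.5
            * math.exp(-4 * math.pi ** 2 * r ** 2 / b_factor))

def matching_occupancy(q1: float, b1: float, b2: float) -> float:
    """Occupancy at B = b2 that reproduces the peak height of an atom with (q1, b1)."""
    return q1 * (b2 / b1) ** 1.5

q2 = matching_occupancy(0.5, 20.0, 30.0)
print(f"q=0.50, B=20  vs  q={q2:.2f}, B=30")
for r in (0.0, 0.5, 1.0, 1.5):
    print(r, round(atom_density(0.5, 20.0, r), 4), round(atom_density(q2, 30.0, r), 4))
```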

To address these issues, and to make it possible to model anything but the highest-occupancy binders, Pan-Dataset Density Analysis (PanDDA) was developed. PanDDA leverages the many similar datasets produced by a fragment screen to identify outlying density, estimate occupancy, and generate background-corrected “event maps”: maps that approximate what a full-occupancy binder would look like, and hence are straightforward to model confidently.
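A heavily simplified 1D sketch of two ideas behind PanDDA, on synthetic data: a per-voxel Z-score against a set of ground-state datasets to flag outlying density, and an event map in the form commonly given for PanDDA, (ρ_obs - BDC·ρ_ground) / (1 - BDC), where the background density correction BDC is roughly one minus the occupancy of the bound state. The real program also aligns maps, models uncertainty and estimates BDC from the data; here the occupancy is simply known. All names and values are hypothetical.

```python
import random
import statistics

random.seed(2)
N_VOXELS, N_GROUND = 20, 30
SITE = 12  # hypothetical binding-site voxel

ground_state = [1.0 if v % 5 == 0 else 0.2 for v in range(N_VOXELS)]  # toy unbound density
ground_datasets = [[g + random.gauss(0.0, 0.05) for g in ground_state] for _ in range(N_GROUND)]

bound_state = list(ground_state)
bound_state[SITE] += 1.5  # ligand density at full occupancy

occupancy = 0.25
observed = [occupancy * b + (1 - occupancy) * g for b, g in zip(bound_state, ground_state)]

# 1) Z-map: how unusual is each voxel compared with the ground-state datasets?
mean = [statistics.fmean([d[v] for d in ground_datasets]) for v in range(N_VOXELS)]
sd = [statistics.stdev([d[v] for d in ground_datasets]) for v in range(N_VOXELS)]
z_map = [(o - m) / s for o, m, s in zip(observed, mean, sd)]

# 2) Event map: remove a fraction BDC of the ground state and rescale.
def event_map(obs, ground, bdc):
    return [(o - bdc * g) / (1 - bdc) for o, g in zip(obs, ground)]

# For clarity this uses the noise-free ground state and the true occupancy;
# PanDDA has to estimate both from the data.
event = event_map(observed, ground_state, bdc=1 - occupancy)

print("voxel with largest Z:", max(range(N_VOXELS), key=lambda v: z_map[v]))
print(f"event map at site: {event[SITE]:.3f}  (bound state: {bound_state[SITE]:.3f})")
```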

While PanDDA will often allow us to build confident models by eye in event maps, the process of producing those maps has broken the link between the experimental data and the crystallographic model. So although we can build a model that is convincing in the PanDDA event map, refining it confidently against the original data is often impossible, and our desired output, “is the fragment there?”, now has two answers: PanDDA says yes, based on evidence from many datasets as interpreted by the human eye, while conventional crystallography (RFree) might say no (or at least “not with any great confidence”), based on the evidence from one dataset.

Beyond the methodological concern that the experimental output is now decided by fitting to a non-physical (albeit well-reasoned) map, this has a practical problem: by separating ourselves from the tools of conventional crystallography, we have lost the ability to quantify our confidence numerically. Information-theoretic methods offer a way to decide between models based on likelihood, but that likelihood is produced by a refinement engine that we know is struggling to fit the model. The likelihood function also does not account for the information gained from the other datasets, so it is necessarily far less confident in any fragment build, and must underestimate the plausibility of the build relative to a human.

Today, confidence in a PanDDA hit amounts to a crystallographer saying that the ligand fits in the hole and that its interactions with the protein look plausible. This is a far cry from the rigour normally associated with crystallography, and once again invites human bias (known to be famously strong in this field) into our experimental readout. It also highlights that information-theoretic criteria fail to capture one of the most powerful priors humans use when judging PanDDA maps: whether the interactions between the protein and the ligand are plausible. This is less a flaw of the criteria themselves than of the likelihood functions in refinement engines, which do not capture this information. Hence it is unclear how much can be gained by validating models of fragment binding events with these criteria.

This greatly complicates automating the interpretation of the experiment. If the only metric thought to be reliable is the human eye, then we cannot get a machine to make these decisions, and with the massive increase in the scale of these experiments, that is unlikely to remain acceptable for long. Fortunately, enormous amounts of manually annotated data are available. Although this does nothing to help likelihood-based model comparison, it does hold promise for training classifiers to make empirically justified decisions about the “truth” of a fragment model in a PanDDA event map.
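A minimal sketch of the kind of classifier this could enable: logistic regression, fitted by gradient descent, on two hypothetical per-event features with synthetic labels standing in for human annotations. The features, their distributions and the resulting accuracy are invented for illustration and say nothing about how well such a classifier would perform on real PanDDA events.

```python
import math
import random
import statistics

random.seed(3)

def synthetic_event(is_hit):
    """Hypothetical event features, e.g. (map-model correlation, peak Z-score), with a
    synthetic label standing in for a human 'hit' / 'not a hit' annotation."""
    centre = (0.8, 4.0) if is_hit else (0.4, 2.0)
    return [random.gauss(centre[0], 0.1), random.gauss(centre[1], 0.7)], int(is_hit)

data = [synthetic_event(i % 2 == 0) for i in range(400)]
train, test = data[:300], data[300:]

# Standardise each feature using training-set statistics only.
mu = [statistics.fmean([x[j] for x, _ in train]) for j in range(2)]
sd = [statistics.stdev([x[j] for x, _ in train]) for j in range(2)]

def scale(x):
    return [(xj - m) / s for xj, m, s in zip(x, mu, sd)]

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic(rows, lr=0.5, epochs=300):
    """Logistic regression fitted by batch gradient descent on the mean cross-entropy."""
    w, b = [0.0] * len(rows[0][0]), 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for x, y in rows:
            err = sigmoid(b + sum(wi * xi for wi, xi in zip(w, x))) - y
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / len(rows) for wi, g in zip(w, gw)]
        b -= lr * gb / len(rows)
    return w, b

w, b = train_logistic([(scale(x), y) for x, y in train])

def predict_hit(x):
    return sigmoid(b + sum(wi * xi for wi, xi in zip(w, scale(x)))) > 0.5

accuracy = sum(predict_hit(x) == bool(y) for x, y in test) / len(test)
print(f"held-out accuracy on synthetic events: {accuracy:.2f}")
```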

Summary

Fragment-based crystallographic screening is an enormously powerful tool for medicinal chemistry, with a uniquely rich readout for informing decisions in downstream drug development. However, there still seem to be fundamental holes in how experimental results are assessed. The current best solution is to have a human look at the model in a PanDDA event map, but this severely limits the potential to automate building or validation, and falls prey to the infamous “ligand of desire” bias.

That said, there is great promise for solving the problem of validating these models: information-theoretic criteria from statistics may prove powerful, rich priors on plausible protein-ligand interactions remain unexploited, and vast amounts of carefully annotated data could be used to train empirical methods to reproduce the human decisions that are the current gold standard. It is therefore not difficult to imagine a world in which models from these experiments are built and validated without human intervention.

References

[1] Nathan S. Babcock, Daniel A. Keedy, James S. Fraser, David A. Sivak. Model Selection for Biological Crystallography. bioRxiv 448795; doi: https://doi.org/10.1101/448795

All images in this blog were borrowed from elsewhere on the internet!